The conversation around websites has changed quietly but decisively. A few years ago, having a modern site meant responsive design, clean visuals, and basic performance optimization.

Today, those are table stakes. What differentiates high-performing digital experiences now is how intelligently they adapt, how quickly they respond, and how effortlessly they guide users from intent to action.

In 2026, website development is no longer just a technical function. It sits at the intersection of growth, brand perception, and operational efficiency.

Advances in artificial intelligence, the rise of no-code and low-code platforms, heightened expectations around speed, and a sharper focus on user experience are reshaping how websites are planned, built, and evolved.

This article explores the most important website development trends for 2026, focusing on what actually drives results.

Rather than theory or hype, the emphasis is on practical shifts that influence visibility, engagement, and long-term digital performance.

Why 2026 Is Different: Acceleration Factors

Three catalyzing forces make 2026 a pivot point:

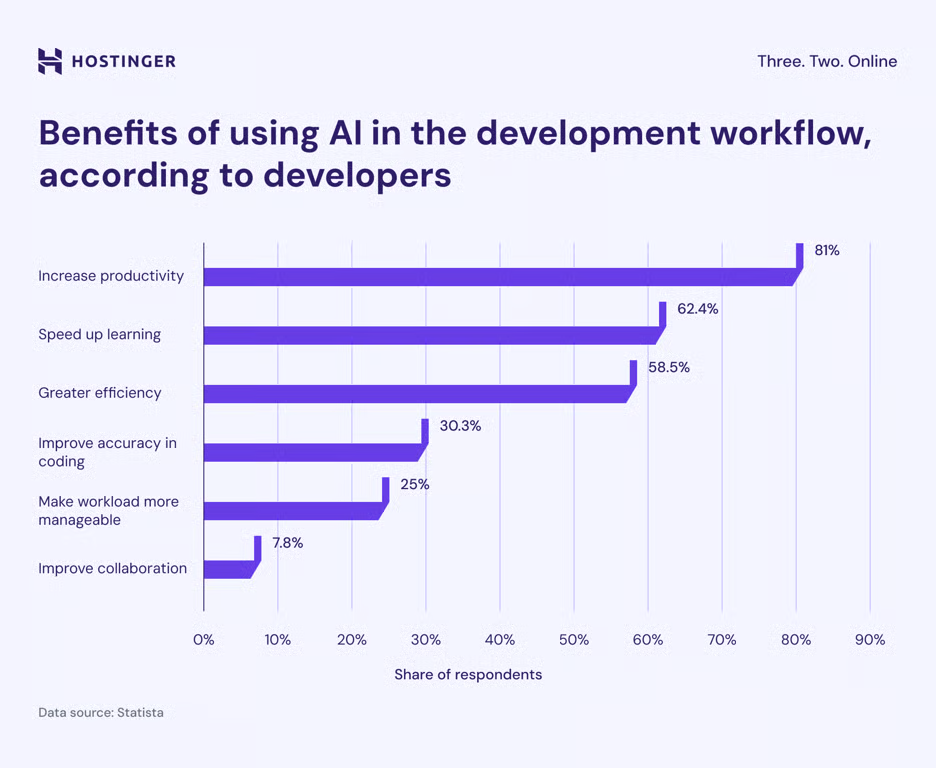

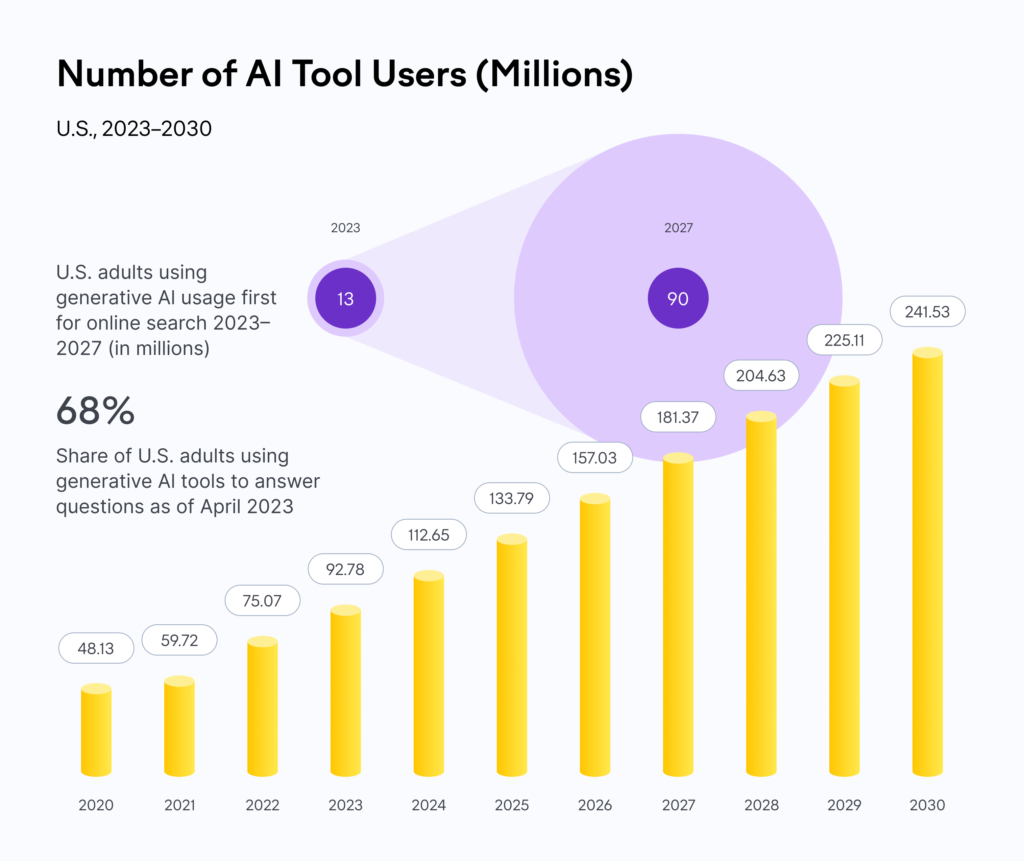

- AI is moving from novelty to daily utility. Developer surveys show AI tools are now used by the majority of builders – this shifts how teams prototype, debug, and scale features.

- Platformization of development. Low-code/no-code platforms now tackle real business applications – not just prototypes – which shortens time-to-market and reduces IT bottlenecks.

- User expectations keep rising. Mobile traffic and immediacy expectations require sites to be fast, resilient, and contextually intelligent at scale.

Trend 1 – AI-driven Development: More Than Autocomplete

What’s changed: in 2026, AI is embedded across the stack (from automated accessibility checks and image optimization to intelligent site search, personalized content recommendations, and developer copilots that speed implementation). Adoption in marketing and product teams is now mainstream.

AI’s role is no longer simply “autocomplete for code.” In 2026, experts recommend thinking of AI across four layers:

- Developer productivity (co-pilot tools): AI speeds boilerplate generation, refactors, and test scaffolding. This reduces repetitive work and frees senior engineers for architecture and quality. Survey data shows high adoption of AI tools in developer workflows.

- Personalization at scale: Personalization increases conversion and customer lifetime value when it’s driven by relevant data and tested models. McKinsey’s analysis shows personalization can boost revenues meaningfully when executed well.

- Design and content automation: Generative models can produce professional web design mockups, microcopy, and image variations that designers iterate on – reducing design cycles without removing the creative judgment humans provide. Various tools now embed AI features that generate layouts, copy, and assets.

- Runtime intelligence: Personalization engines, recommendation layers, and AI search are moving from experimental to core features. Sites that use AI to surface contextually relevant offers, content, or navigation paths keep users engaged for longer and improve funnel metrics.

Actionable: Adopt an AI co-pilot for routine dev tasks, but standardize a human review process for outputs. Treat AI as an accelerant, not a substitute. Validate generated code and content with testing and human oversight.

Trend 2 – Low-Code/No-Code Platforms Become Strategic

What’s changed: Low-code/no-code development platforms are no longer only for internal dashboards – they’re used for campaign landing pages, microsites, and even customer service portals.

Experts report fast market growth and strong enterprise interest. When combined with secure APIs and proper governance, they’re a strategic shortcut.

No-code and low-code platforms have matured. Rather than replacing engineering teams, they’re amplifying them.

How to use it:

- Use low-code for modular, repeatable experiences (campaign builders, internal tools, simple service flows). Keep mission-critical systems on traditional engineering rails.

- Define clear integration contracts and security controls: treat low-code outputs as code artifacts that must meet the same standards for API usage, auth, and monitoring.

- Train a small “citizen developer” community with guardrails (templates, component libraries, accessibility checks).

Actionable: Build a governance model (catalogue, security baseline, CI/CD integration) so low-code projects can graduate to traditional engineering when they need to scale. Use one shared term internally, such as “low-code/no-code development”, to align non-technical stakeholders with the approach, and evaluate vendor lock-in risk carefully.

Trend 3 – Performance and Core Web Vitals are Conversion Multipliers, not just SEO Checkboxes

What’s changed: Google’s Page Experience and Core Web Vitals remain central to both ranking and perceived quality. Importantly, empirical tests repeatedly show that small latency reductions can produce double-digit conversion improvements in many sectors.

Search engines and real users both punish slow sites. Speed is a technical SEO and business KPI.

Why it matters:

- Speed directly affects bounce, engagement, and revenue. A 0.1s improvement in page load can materially lift conversions for travel, commerce, and luxury segments.

- Many sites still fail Core Web Vitals at scale – improving scores is a competitive opportunity.

How to use it:

- Instrument performance as revenue: measure Core Web Vitals, time to interactive, and conversion funnels together.

- Prioritize fast wins: critical image optimization, server-side rendering for key pages, efficient caching, and measurable lazy loading.

- Consider edge delivery (CDN + edge functions) for highly dynamic yet cacheable experiences – it narrows the speed gap across geographies.
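As a rough illustration of measuring Core Web Vitals in a way that can sit next to funnel data, the sketch below classifies field samples at the 75th percentile using the published "good" and "poor" thresholds for LCP, INP, and CLS. The function names and the nearest-rank percentile method are assumptions made for this example, not part of any particular monitoring tool.

```python
from math import ceil

# Published Core Web Vitals thresholds: (upper bound for "good", upper bound
# for "needs improvement"). LCP and INP are in milliseconds; CLS is unitless.
THRESHOLDS = {
    "LCP": (2500, 4000),
    "INP": (200, 500),
    "CLS": (0.1, 0.25),
}


def percentile_75(samples):
    """Return the 75th percentile using the nearest-rank method."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = ceil(0.75 * len(ordered))  # 1-based rank into the sorted samples
    return ordered[rank - 1]


def rate(metric, samples):
    """Classify a metric as 'good', 'needs improvement', or 'poor' at p75."""
    good_limit, poor_limit = THRESHOLDS[metric]
    p75 = percentile_75(samples)
    if p75 <= good_limit:
        return "good"
    if p75 <= poor_limit:
        return "needs improvement"
    return "poor"
```

Calling `rate("LCP", samples)` per page template and storing the result alongside that template's conversion rate is one simple way to start treating performance as a revenue signal.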

Trend 4 – Human-Centric UX: Clarity, Accessibility, and Trust

What’s changed: Design is treated as a revenue instrument. Multiple industry studies show that design and UX investments yield outsized returns – from higher conversion rates to better retention.

In 2026, the UX bar is higher:

- Micro-interactions and reduced decision friction – small, well-timed UI cues drive conversions more consistently than flashy hero sections.

- Accessibility as baseline – WCAG compliance and keyboard/voice interfaces are not optional. Accessible design opens market access and reduces legal risk.

- Trust signals and data transparency – clearer privacy controls and contextual data use disclosures improve retention.

Actionable: Embed UX research in the dev cycle: run small prototype tests, measure task completion and time-to-task, iterate. Use progressive disclosure to minimize cognitive load, and design flows that work with interruptions (mobile users, multitasking).

Trend 5 – Composable & Headless Architectures Win for Scale

What’s changed: “Headless” architectures and composable stacks continue to win for teams that need scalability and multi-touch personalization. At the same time, the ecosystem has matured: frameworks like Next.js and serverless patterns make a good developer experience (DX) and an excellent user experience more achievable.

Why it matters:

- A componentized API-driven front end lets teams serve multiple channels (web, apps, kiosks, IoT) from the same content layer.

- It removes monolith constraints – but increases integration responsibilities (caching, orchestration, security).

How to use it:

- Use headless where multi-channel delivery or advanced personalization justifies the complexity. For brochure sites, a modern CMS with SSR may be faster to deliver and easier to maintain.

- Choose frameworks that reduce boilerplate: prefer those with first-class data fetching, streaming, and edge cache integrations to keep latency low. (Hint: evaluate recent framework releases and LTS timelines when choosing a stack.)

- Maintain a small “platform” layer that provides reusable UI components, authentication, and measurement so teams can ship safely.
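To make the "one content layer, many channels" idea concrete, here is a minimal sketch in which a single content record is rendered once as HTML for the web and once as a JSON-style payload for an app. The record shape, field names, and renderer functions are all hypothetical; a real headless setup would fetch the record from a CMS API.

```python
from html import escape

# Hypothetical content record, standing in for a response from a headless CMS.
ARTICLE = {
    "title": "Pricing & plans",
    "body": "Compare tiers.",
    "cta_url": "/pricing",
}


def render_web(content):
    """Render the record as an HTML fragment, escaping all text fields."""
    title = escape(content["title"])
    body = escape(content["body"])
    url = escape(content["cta_url"], quote=True)
    return (
        f"<article><h1>{title}</h1><p>{body}</p>"
        f'<a href="{url}">Learn more</a></article>'
    )


def render_app(content):
    """Render the same record as a card payload for a native app client."""
    return {
        "type": "card",
        "heading": content["title"],
        "text": content["body"],
        "action": {"kind": "open_url", "url": content["cta_url"]},
    }
```

The content is authored once; each channel decides only how to present it, which is the property that justifies the added integration work.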

Trend 6 – Privacy-Aware Personalization (Privacy + Relevance)

What’s changed: Personalization driven by AI and first-party data provides measurable uplift, but privacy rules have changed how data can be used. Companies that win balance strong personalization with simple, transparent consent and a first-party data strategy.

Why it matters:

- Consumers reward relevance, but they will abandon experiences that feel intrusive or opaque.

- Shipping personalization without clear data governance increases legal and reputational risk.

How to use it:

- Start with contextual personalization (session signals + page intent) before integrating cross-device profiles.

- Build an explicit first-party data layer (consented, anonymized, and versioned) and a small experimentation cadence to validate revenue impact.

- Use server-side personalization where possible to avoid exposing user identifiers to third parties.

Actionable: Design personalization as a permissioned benefit: ask once, deliver value, and make it easy to opt out. Use edge or server-side logic to avoid privacy leaks through client-side scripts.
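One way to picture consent-gated, server-side personalization is the sketch below. It uses a first-party profile segment only when the visitor has opted in, and otherwise falls back to contextual signals from the current session. The `Session` fields, the intent labels, and the banner identifiers are illustrative assumptions, not a real product's schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Session:
    page_intent: str                       # hypothetical label, e.g. "pricing"
    referrer_type: str                     # hypothetical label, e.g. "search"
    has_consent: bool                      # True only after an explicit opt-in
    profile_segment: Optional[str] = None  # first-party segment, if known


def choose_banner(session):
    """Pick a banner on the server so no user identifier reaches third parties."""
    # Profile-based personalization is used only with explicit consent.
    if session.has_consent and session.profile_segment:
        return f"segment:{session.profile_segment}"
    # Without consent, rely on contextual signals from this session alone.
    if session.page_intent == "pricing":
        return "context:talk-to-sales"
    if session.referrer_type == "search":
        return "context:getting-started"
    return "default"
```

Because the profile branch is unreachable without consent, opting out degrades the experience to contextual relevance rather than breaking it.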

Trend 7 – Search Reimagined: AI Search and Discovery

Search is shifting from keyword return lists to AI-assisted conversational retrieval. This alters how content should be structured.

Implication: content that’s semantically rich, well-structured (schema, FAQs, modular content), and answer-focused will be more discoverable by AI search layers. Traditional SEO still matters, but the content unit mix shifts toward concise, authoritative answers.

Actionable: Annotate key pages with structured data, produce short answer snippets for FAQ/knowledge pages, and instrument search performance to measure discovery via AI channels.
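For FAQ and knowledge pages, structured data usually means schema.org `FAQPage` markup embedded as JSON-LD. The sketch below builds that markup from question and answer pairs; the helper names are made up for this example, and the output should still be checked with a structured data testing tool before it ships.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def as_script_tag(data):
    """Serialize JSON-LD into a script tag that is safe to embed in HTML."""
    # Escape "</" so answer text cannot close the script element early.
    payload = json.dumps(data, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'
```

Keeping each answer short and self-contained serves both the markup and the "concise, authoritative answers" goal described above.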

Trend 8 – Testing and Observability at the Edge

What’s changed: The velocity of change (feature releases, content experiments, AI experiments) necessitates stronger observability. Teams must know quickly which change moved which metric.

With distributed architectures and AI-driven UI changes, observability must track from edge to origin.

- What to measure: frontend metrics (Core Web Vitals), custom business events (lead clicks, form completions), and model outputs (accuracy of personalization).

- Why: Businesses must validate that AI-driven changes improve outcomes, not just ship novelty.

Actionable: Invest in an observability stack that correlates business outcomes with technical signals and makes dashboards visible to product and marketing teams.
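As a small example of correlating a technical signal with a business outcome, the sketch below groups sessions by their Largest Contentful Paint and reports the conversion rate for each group. The bucket names and the 2500 ms and 4000 ms edges are assumptions chosen for illustration; any real analysis would also need enough sessions per bucket to be meaningful.

```python
from collections import defaultdict


def conversion_by_lcp_bucket(sessions, fast_ms=2500, slow_ms=4000):
    """Return conversion rate per LCP bucket.

    sessions: iterable of (lcp_ms, converted) pairs, where converted is a bool.
    """
    totals = defaultdict(lambda: [0, 0])  # bucket -> [sessions, conversions]
    for lcp_ms, converted in sessions:
        if lcp_ms <= fast_ms:
            bucket = "fast"
        elif lcp_ms <= slow_ms:
            bucket = "moderate"
        else:
            bucket = "slow"
        totals[bucket][0] += 1
        totals[bucket][1] += int(converted)
    return {bucket: conv / count for bucket, (count, conv) in totals.items()}
```

A table like this is a correlation, not proof of cause, but it gives product and marketing teams a shared view of how speed and outcomes move together.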

Common Pitfalls (and How to Avoid Them)

1. Using AI without measurement

AI adoption is moving faster than governance in many teams. It’s common to see AI features rolled out because they sound innovative – automated content, chat interfaces, personalization engines – without a clear definition of success.

The risk is subtle but serious. Without measurement, AI becomes a cost center rather than a growth lever. In some cases, it can even degrade the experience by surfacing irrelevant content, inaccurate responses, or inconsistent recommendations.

✅ How to avoid it

Every AI-driven feature should be treated like a product experiment, not a background utility.

- Define one primary business metric before launch: conversion rate, lead quality, engagement depth, or support deflection.

- Track error rates alongside revenue impact. This includes irrelevant recommendations, incorrect responses, or failed automations.

- Establish a comparison baseline (pre-AI vs post-AI) so the impact can be attributed clearly.

- Schedule periodic human reviews to audit outputs, especially for customer-facing interactions.

When AI performance is tied directly to measurable outcomes, it stops being a buzzword and starts behaving like a scalable growth asset.
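A pre-AI versus post-AI comparison can be sanity-checked with a two-proportion z-test on conversion counts, sketched below. The function name and inputs are assumptions for this example. A before/after comparison is also not a randomized experiment, so seasonality and traffic mix can distort the result; a proper A/B split is preferable where possible.

```python
from math import erf, sqrt


def two_proportion_z(conv_before, n_before, conv_after, n_after):
    """Compare two conversion rates.

    Returns (absolute lift, z statistic, two-sided p-value).
    """
    rate_before = conv_before / n_before
    rate_after = conv_after / n_after
    pooled = (conv_before + conv_after) / (n_before + n_after)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_before + 1 / n_after))
    if std_err == 0:
        raise ValueError("rates are all 0% or all 100%; the test is undefined")
    z = (rate_after - rate_before) / std_err
    # Two-sided p-value from the standard normal CDF, expressed via erf.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return rate_after - rate_before, z, p_value
```

For instance, `two_proportion_z(100, 2000, 150, 2000)` compares a 5% baseline with a 7.5% post-launch rate and returns the lift together with how likely a gap that large is under pure chance.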

2. Over-engineering for scale too early

Modern frameworks, headless architectures, and microservices are powerful – but power comes with complexity. A frequent mistake is designing for “future scale” before current needs justify it.

The result is often slower development cycles, higher maintenance overhead, and a system that’s harder to change, not easier. Teams spend more time managing infrastructure than improving user experience or testing new ideas.

✅ How to avoid it

Scalability should be intentional, not speculative.

- Start by aligning architecture decisions with actual traffic patterns and personalization requirements, not hypothetical future states.

- Use modular systems where components can be swapped or extended later without a full rebuild.

- Favor proven, stable tools over bleeding-edge frameworks unless there’s a clear performance or capability gain.

- Reassess architecture every 6–12 months as usage grows, rather than locking into overly complex systems upfront.

The most resilient platforms in 2026 will be the ones that evolve gradually, not the ones that attempt to solve every future problem on day one.

3. Ignoring performance budgets

Performance often degrades quietly. A new analytics script here, a heavier image there, a third-party tool added for convenience – and suddenly pages feel slower, conversions dip, and engagement drops.

Without performance budgets, these regressions go unnoticed until they start affecting business results.

✅ How to avoid it

- Define clear performance budgets for key metrics such as Largest Contentful Paint, interaction latency, and total page weight.

- Communicate these budgets across design, development, and marketing teams, so everyone understands the constraints.

- Enforce budgets in CI pipelines, so regressions are flagged before deployment, not after users feel the impact.

- Review third-party scripts regularly and remove anything that no longer delivers measurable value.

When performance is treated as a shared responsibility – not just an engineering concern – it becomes a durable competitive advantage rather than an ongoing firefight.
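Enforcing a budget in CI can be as simple as comparing measured values against agreed limits and failing the build on any violation. The sketch below assumes the metrics have already been collected by some earlier pipeline step; the metric names and the budget values are hypothetical placeholders, not recommendations.

```python
# Hypothetical budgets; real values should be agreed across teams.
BUDGETS = {
    "lcp_ms": 2500,
    "inp_ms": 200,
    "page_weight_kb": 1500,
}


def budget_violations(measured, budgets=BUDGETS):
    """Return a list of human-readable violations (empty if within budget)."""
    violations = []
    for metric, limit in budgets.items():
        value = measured.get(metric)
        if value is None:
            # A missing measurement is treated as a failure, not a pass.
            violations.append(f"{metric}: no measurement reported")
        elif value > limit:
            violations.append(f"{metric}: {value} exceeds budget {limit}")
    return violations


def main(measured):
    """Print violations and return a process exit code for the CI job."""
    problems = budget_violations(measured)
    for problem in problems:
        print(problem)
    return 1 if problems else 0
```

In a pipeline step, passing the result of `main(measured)` to `sys.exit` makes any regression fail the build before deployment.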

Conclusion

In 2026, the most effective websites are no longer static digital assets. They operate as adaptive systems – continuously learning, responding, and optimizing based on real user behavior and measurable outcomes.

The shift is subtle but decisive: development decisions now influence visibility, trust, conversion, and operational velocity as directly as marketing or product strategy.

The trends outlined in this article share a common thread. Artificial intelligence accelerates execution but demands governance.

Low-code platforms unlock speed but require structure. Performance, accessibility, and UX are no longer aesthetic considerations; they are multipliers of revenue and engagement.

When speed, UX, AI, and governance work together, websites stop being cost centers and start functioning as durable growth infrastructure.

Wondering how your website can leverage AI, speed, and UX trends to stay ahead in 2026? Contact us to find out.